Predictive Policing

Laura Del Vecchio

© Alexander @ stock.adobe.com

Foretelling Crime

In recent years, a growing number of police forces worldwide have adopted AI-based software that uses statistical data to guide their decision-making, an approach known as predictive policing. Predictive policing is not a technology in itself but a concept, defined by Albert Meijer and Martijn Wessels in a 2019 report as "[...] the collection and analysis of data about previous crimes for identification and statistical prediction of individuals or geospatial areas with an increased probability of criminal activity to help develop policing intervention and prevention strategies and tactics." The approach is increasingly debated by authorities, law enforcement agencies, and the general public.

Although no nation has yet implemented a national predictive policing program (Japan is so far the only country to have announced interest in a national predictive software program), several predictive tools have been developed and deployed in cities around the world to help police departments estimate the probability of crime. For example, the company Palantir has been testing predictive policing tools in US cities such as Chicago, New Orleans, New York, and Los Angeles since 2012, while countries such as China, the United Kingdom, Germany, Denmark, India, and the Netherlands are reported to be expanding, or planning to expand, their use of predictive tools.

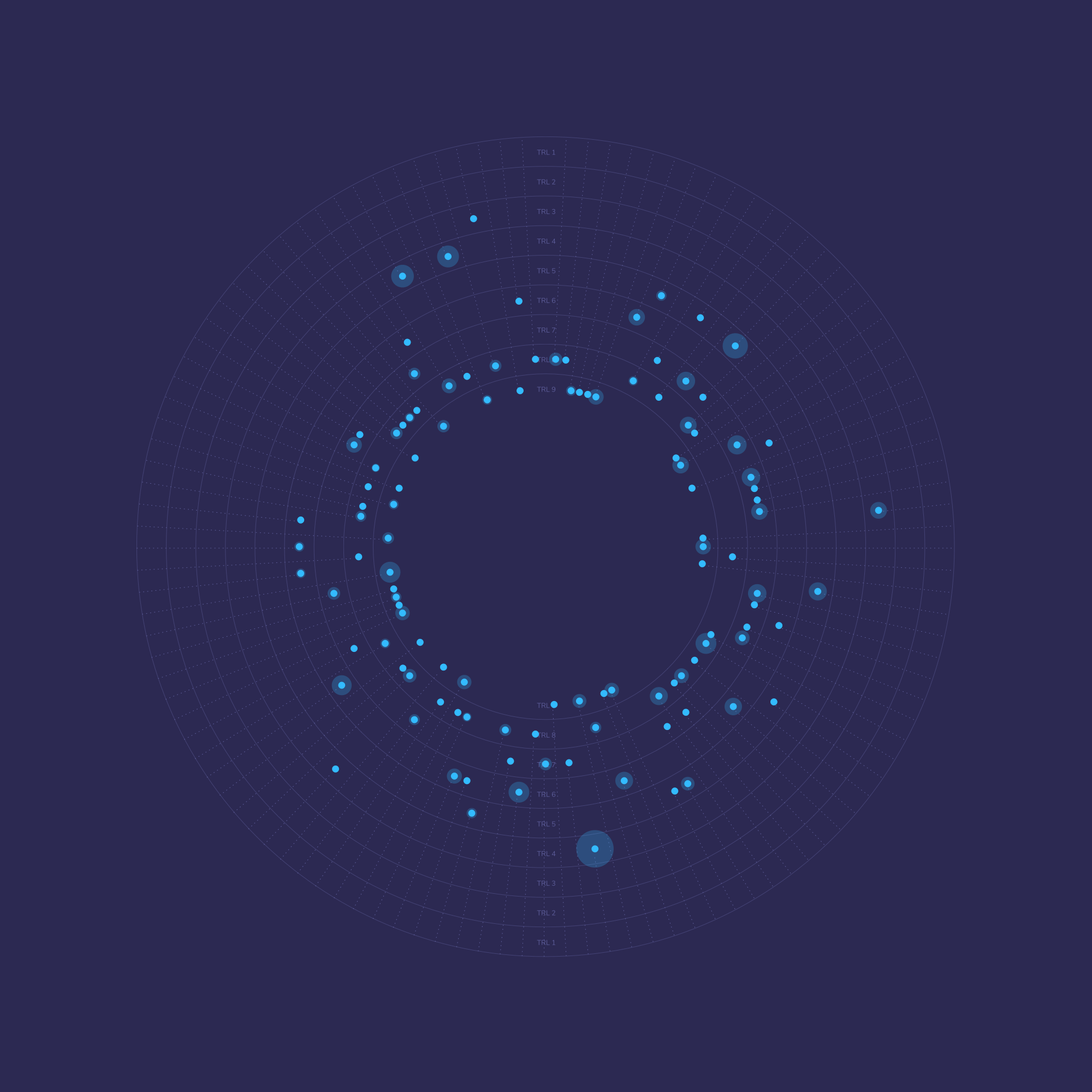

Predictive policing tools combine several distinct technologies. At their core is Machine Learning Data Analytics, in which automated statistical methods evaluate pre-established parameters to estimate the probability of crime. These parameters draw on data from Facial Recognition and Voice Recognition tools, while Spatial Computing helps determine which areas are most prone to criminal activity. Beyond these sources, predictive policing tools also analyze past and present social media communications, financial transactions, and data extracted from crowdsourced platforms. By mining this information, the analytics can flag individuals deemed likely to engage in criminal behavior or to repeat certain illegal activities. Such flags can help police forces intervene preventively, for instance through counseling or community management programs aimed at heading off harmful behavior, shifting law enforcement from a reactive to a proactive paradigm.
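To make the spatial side of this more concrete, here is a minimal Python sketch of one common hotspot approach: bin past incident locations into grid cells and weight each incident by how recent it is. It is not any vendor's actual algorithm; the incident format `(x_km, y_km, day)`, the 0.5 km cell size, the 30-day half-life, and the function names `hotspot_scores` and `top_cells` are all assumptions made for illustration.

```python
import math
from collections import defaultdict


def hotspot_scores(incidents, cell_size=0.5, half_life_days=30.0, today=0.0):
    """Score grid cells by a time-decayed count of past incidents.

    incidents: iterable of (x_km, y_km, day) tuples (hypothetical format).
    Each incident adds 0.5 ** (age / half_life_days), so an incident one
    half-life old counts half as much as one from today.
    """
    scores = defaultdict(float)
    for x, y, day in incidents:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        age = today - day
        scores[cell] += 0.5 ** (age / half_life_days)
    return dict(scores)


def top_cells(scores, k=3):
    """Return the k highest-scoring cells, e.g. as candidate patrol areas."""
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Toy data: days are relative to today (0), so -40 means 40 days ago.
incidents = [
    (0.1, 0.2, -1), (0.3, 0.4, -2), (0.2, 0.1, -40),  # cell (0, 0)
    (1.2, 0.3, -5),                                   # cell (2, 0)
    (2.6, 2.7, -90),                                  # cell (5, 5)
]
print(top_cells(hotspot_scores(incidents), k=2))  # [(0, 0), (2, 0)]
```

Because the ranking is driven entirely by recorded incidents, whatever gaps or distortions exist in those records flow straight into where patrols are sent.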

Cities using this statistical model have reported lower crime rates and improved safety in dangerous neighborhoods. In the United States, for example, the police department of Richmond, Virginia, used historical data drawn from extensive databases to forecast where gunfire was most likely to occur on New Year's Eve in 2013, and adapted its patrols to the predictions. The strategy produced striking results: random gunfire that night fell by 47%, and the number of weapons seized by police rose by 246%. The department also saved $15,000 in resources, making the operation more cost-effective than policing without such tools.

Nevertheless, there are many signs that predictive policing has serious drawbacks that stand in the way of wider deployment. Using predictive models in decision-making turns investigative processes once grounded in theory and extensive examination of evidence into a procedure of data collection, analysis, and ranking. As a result, these models' predictions may present a skewed picture of society and criminal behavior, since they are likely to strip contextual circumstances out of their analysis.

Inherited Bias

Although entrusting computers with this intricate decision-making process can sound frightening, some argue that algorithms could be more objective and less prone to human biases. This assumes, however, that the systems have access to bias-free data. At present that is unlikely: there is widespread agreement that much of the underlying data reflects ingrained biases against minorities in poor neighborhoods, and AI pattern recognition in most cases ends up treating correlated data as the cause of the problem. If an algorithm learned, for example, that people from poor neighborhoods have a high recorded likelihood of child abuse, and ethnic minorities are overrepresented in those neighborhoods, it could also conclude that ethnic minorities are likely to show such behavior. The algorithm might then treat both factors as indicators of abuse, following a dangerous, discriminatory path.
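This proxy effect can be shown with a toy example. The hypothetical `risk_score` function below never sees group membership, only whether someone lives in a poor neighborhood, yet because the invented population correlates the two, the average score still differs sharply by group. The population counts and the weights 0.7 and 0.2 are made up purely for illustration.

```python
from statistics import mean

# Invented population of (lives_in_poor_neighborhood, minority) pairs.
# Minority membership is deliberately correlated with neighborhood.
population = (
    [(True, True)] * 60 + [(True, False)] * 20       # poor neighborhoods
    + [(False, True)] * 20 + [(False, False)] * 100  # other neighborhoods
)


def risk_score(poor_neighborhood):
    """'Group-blind' score: looks only at neighborhood (weights are arbitrary)."""
    return 0.7 if poor_neighborhood else 0.2


def mean_score(minority):
    """Average score the model assigns to one group."""
    return mean(risk_score(poor) for poor, is_min in population if is_min == minority)


print(round(mean_score(True), 3), round(mean_score(False), 3))  # 0.575 0.283
```

Removing the sensitive attribute from the inputs is therefore not enough on its own: a correlated feature can carry the same information.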

Although Machine Learning is by its very nature a form of statistical discrimination, the bias at issue here is the unwanted kind, which places privileged groups at a systematic advantage and unprivileged groups at a systematic disadvantage. Examples include predictive policing systems caught in runaway feedback loops of discrimination, in which patrols sent to areas flagged by past records generate more records there and so reinforce the original prediction, and hiring tests that end up excluding applicants from low-income neighborhoods or preferring male applicants to female ones.
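A runaway feedback loop can be sketched in a few lines. This simplified simulation assumes a single patrol, two districts with identical true incident rates, and a greedy rule that sends the patrol wherever the most incidents have been recorded; crucially, incidents are only recorded where the patrol goes. All parameter values and the `simulate_feedback` name are hypothetical.

```python
def simulate_feedback(true_rate=(10, 10), initial_records=(6, 5), days=30):
    """Greedy patrol allocation where crime is only recorded where police patrol.

    true_rate: actual incidents per day in each district (equal by assumption).
    initial_records: historical counts that seed the model (hypothetical).
    Each day the single patrol goes to the district with the most recorded
    incidents, and only that district's incidents enter the data.
    """
    records = list(initial_records)
    for _ in range(days):
        # Pick the district with the highest recorded count (first one on ties).
        target = max(range(len(records)), key=lambda d: records[d])
        records[target] += true_rate[target]
    return records


print(simulate_feedback())  # [306, 5]
```

A one-incident head start is enough for district 0 to absorb every new record, even though both districts have the same underlying rate; the data end up confirming the model's own allocation choices.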

Given the ubiquity of algorithms and their far-reaching impact on society, scientists are trying to help prevent injustice by creating tools that detect underlying unfairness in these programs. Even the best of these technologies are only a means to an end, but they are essential in paving the way toward trust. The Algorithmic Bias Detection Tool, for example, employs techniques such as variational “fair” autoencoders, dynamic upsampling of training data based on learned representations, and distributional optimization to prevent disparity.
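Bias detection typically starts by measuring disparities in a model's outputs across groups. The sketch below computes two widely used group-fairness metrics, statistical parity difference and disparate impact ratio, on the rate at which each group is flagged. It is not the API of the Algorithmic Bias Detection Tool or of any specific library; the function names and the binary flag and group encoding are assumptions.

```python
def flag_rate(flags, groups, group):
    """Share of the given group that the model flagged (flag == 1)."""
    members = [f for f, g in zip(flags, groups) if g == group]
    return sum(members) / len(members)


def fairness_report(flags, groups, unprivileged, privileged):
    """Two common group-fairness metrics, computed on flag rates.

    parity_difference: rate(unprivileged) - rate(privileged); 0 means parity.
    disparate_impact:  rate(unprivileged) / rate(privileged); 1 means parity.
    """
    r_u = flag_rate(flags, groups, unprivileged)
    r_p = flag_rate(flags, groups, privileged)
    return {"parity_difference": r_u - r_p, "disparate_impact": r_u / r_p}


# Hypothetical model output for eight people in two groups.
flags = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(fairness_report(flags, groups, unprivileged="a", privileged="b"))
# {'parity_difference': 0.5, 'disparate_impact': 3.0}
```

Here members of group "a" are flagged three times as often as members of group "b", the kind of gap a detection tool would surface before any mitigation technique is applied.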

To bridge the gap and minimize potential conflicts rooted in bias and prejudice, a comprehensive solution to algorithmic bias needs to be established on both legal and technical fronts. Otherwise, an unchecked market with access to increasingly powerful predictive tools could gradually and imperceptibly worsen social inequality, perhaps even ushering in a new era of information warfare. In light of this possible dystopian outcome, governments including those of Singapore, South Korea, and the United Arab Emirates have announced new AI ethics boards, committees, or ministries to be integrated into their political systems.

Towards Welfare

Predictive analytics, the same kind of sophisticated pattern analysis used in predictive policing, can also identify the families most in need of assistance. By integrating databases from prisons, psychiatric services, public welfare benefits, and drug and alcohol treatment centers, among others, the software predicts which cases carry the highest risk. This kind of automation helps field staff target investigations based on statistically estimated risk rather than human intuition or random sampling, potentially directing scarce resources where they can have the greatest impact.
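The risk-ranking step can be sketched as a logistic score over indicators pulled from several agency databases. The indicator names, weights, bias term, and case IDs below are invented for illustration; a real system would learn its weights from historical case outcomes and would therefore inherit any bias present in those records.

```python
import math

# Hypothetical binary indicators, each drawn from a separate agency database.
# The weights and bias are invented; a real model would learn them.
WEIGHTS = {
    "prior_prison_record": 0.8,
    "psychiatric_service_contact": 0.5,
    "welfare_benefits": 0.3,
    "addiction_treatment": 0.6,
}
BIAS = -2.0


def risk_probability(case):
    """Logistic score: sigmoid(BIAS + sum of weights for indicators present)."""
    z = BIAS + sum(w for name, w in WEIGHTS.items() if case.get(name, False))
    return 1 / (1 + math.exp(-z))


def rank_cases(cases):
    """Order case IDs from highest to lowest estimated risk."""
    return sorted(cases, key=lambda cid: risk_probability(cases[cid]), reverse=True)


cases = {
    "case-A": {"welfare_benefits": True},
    "case-B": {"prior_prison_record": True, "addiction_treatment": True},
    "case-C": {},
}
print(rank_cases(cases))  # ['case-B', 'case-A', 'case-C']
```

The output is an ordering for field staff to work through, not a verdict on any family.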

Predictive analytics can also assess risk during or after existing preventive services, forecasting the likelihood of repeated problematic behavior while drawing on cross-organizational data to gain information about families whose cases are awaiting review. Because these models learn from historical data, their accuracy and reliability depend on the quality of staff assessments, findings, records, and documentation of case actions.

Future Perspectives

While predictive analytics offers many opportunities, responsible implementation requires open, publicly auditable systems. At first, the technology could serve as an aid to lawyers, public defenders, and police officers rather than as a standalone system. In a culture where many fundamental parts of society are being reorganized through computational techniques, human ethical governance of these solutions becomes increasingly important.

If algorithms are trained on diverse data reflecting a wide range of social and cultural contexts, they may be better able to avoid predictions that lead to unfair outcomes, such as discrimination based on skin color, social class, or sexual orientation. This would reduce the collateral damage these digital analytics platforms can inflict on both fundamental rights and public trust.

Another criticism concerns the very idea of forecasting future behavior: the decision to investigate an individual should rest solely on actual allegations, not on predictions. Because of these concerns, computational models are intended to supplement good casework and administration rather than automate or replace it, providing data-driven insights into relative risk while allowing staff to accept or override recommendations. Further concerns may arise as these systems are widely deployed, including whether officials can understand how they operate. Citizens' general reluctance to rely on automated decisions is another barrier yet to be overcome.
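The human-in-the-loop arrangement described above can be sketched as a small decision record in which the model only suggests an action and staff either accept it or override it. The `Recommendation` and `Decision` types, the action labels, and the rule that an override needs a written reason are assumptions added for illustration, chosen because a documented override trail supports the kind of public auditing the article calls for.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    case_id: str
    risk: float            # model output in [0, 1]
    suggested_action: str  # hypothetical labels such as "review" or "no action"


@dataclass
class Decision:
    case_id: str
    action: str
    overridden: bool
    reason: Optional[str]


def decide(rec: Recommendation, staff_action: Optional[str] = None,
           reason: Optional[str] = None) -> Decision:
    """Staff accept the suggestion (staff_action=None or the same action)
    or override it; an override must carry a documented reason for audits."""
    if staff_action is None or staff_action == rec.suggested_action:
        return Decision(rec.case_id, rec.suggested_action, False, None)
    if not reason:
        raise ValueError("an override requires a documented reason")
    return Decision(rec.case_id, staff_action, True, reason)


rec = Recommendation("case-17", 0.82, "review")
print(decide(rec, "no action", "no allegation on file"))
```

The model never acts on its own here; every final action is a staff decision, and every departure from the model is explained in writing.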

Advances in deep learning that enable real-time sentiment analysis of text, audio, and even video are providing new data-driven indicators that could be integrated with spatial prediction models. Advances in Machine Reasoning could allow future models to go beyond prediction: they could potentially offer explanations for violence and strategies for preventing it.