Morality Engine

Laura Del Vecchio

sebra @ adobe.stock.com

Imagine that public administrations run an automated moral entity for decision-making processes. Its decisions are based on societal preferences collected from extensive databases that aggregate the most commonly desired choices. In the past, society faced ethical dilemmas; now, a built-in computational engine deals with moral issues at runtime. Once this system is in place, society acquires a collective moral discernment built on algorithmic perceptions of social justice. Now free from the burden of making the wrong choice, or at least from the responsibility of making it, decision-makers, law enforcement forces, and the general public believe they have gone beyond good and evil and, in doing so, reached a consensus on common sense.

_

This scenario might lead us to assume that the most widely shared opinions are the best basis for ranking moral reasoning. Yet, without even approaching the roots of morality and ethics that haunted, and still haunt, the minds of many philosophers, how do we expect to mathematically program notions of justice, free will, and the 'self' in a heterogeneous society that is in a continuous cycle of change? With the rapidly growing presence of AI-based technologies that operate through perpetual statistical judgment, how can we identify the best resolutions without excluding the perceptions that fall outside the majority?

A decision must be made. How many times have we heard this before?

The future of morality is intrinsically related to the future of automated systems and to how we teach them to make decisions. However, humanity has not yet reached homogeneous conclusions on morality that are ready to be implemented in automation schemes. This adds extra challenges to developing automated systems, because there is no global formal specification of what is "widely accepted" and what is "not." Since AI research remains largely confined to specialists, who are the ones responsible for collecting and ranking the data, some experts argue that, even if mathematical notions one day become the dominant values of morality, there is no universal rule on ethics and morality.

Iyad Rahwan, a computer scientist at the Massachusetts Institute of Technology in Cambridge, conducted the largest survey on machine ethics to date together with other data scientists and anthropologists. He explains that "[...] people who think about machine ethics make it sound like you can come up with a perfect set of rules for robots, and what we show here with data is that there are no universal rules."

To shed light on this survey, we present below an overview of Rahwan's experiment and its outcomes.

Dilemmas of the Majority: An Experiment

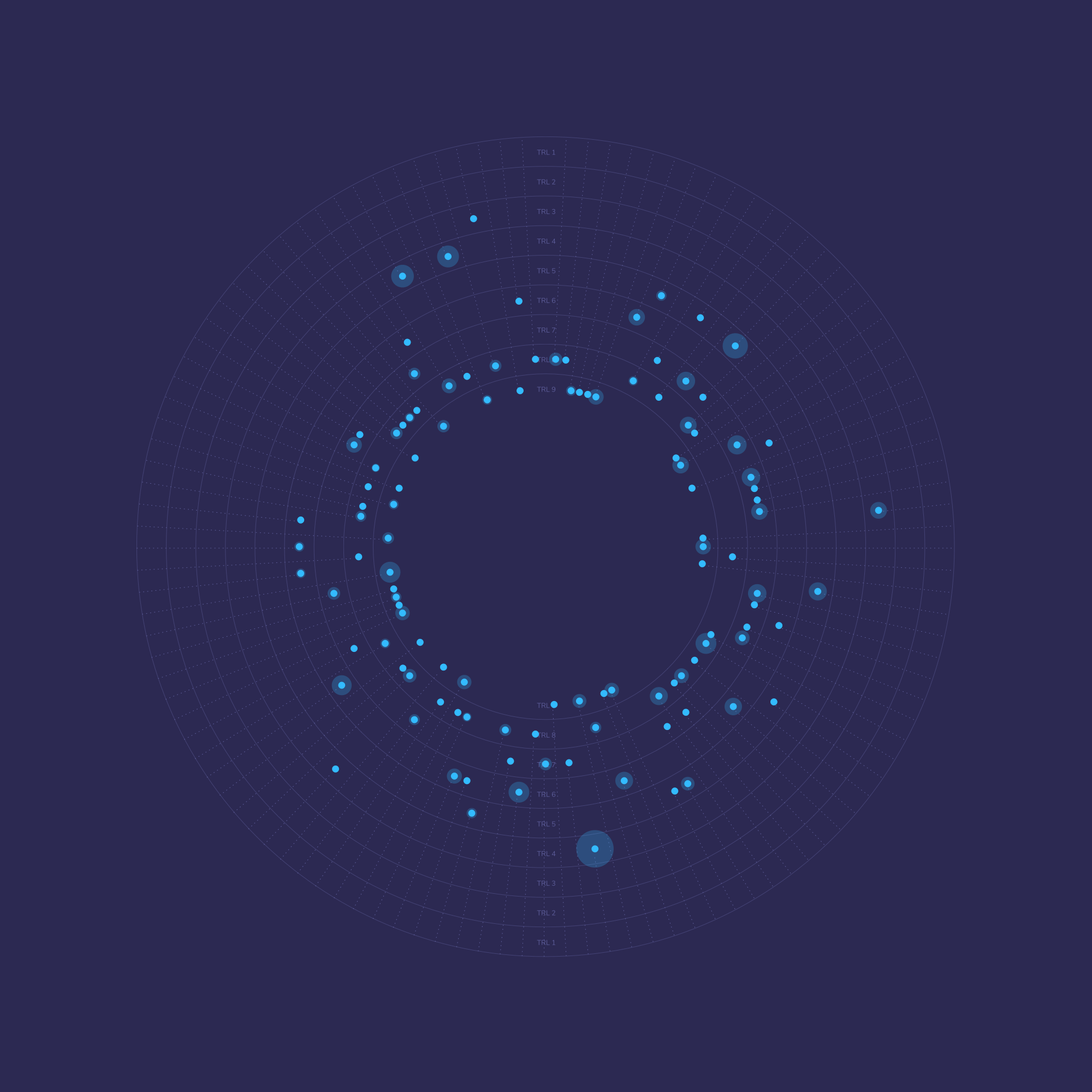

The survey, called the Moral Machine, presented 13 scenarios in which someone's death was unavoidable. The experiment was designed to inform how machines should behave in specific situations that autonomous vehicles will be expected to encounter, such as deciding whether to hit a group of children or a homeless person instead. The survey was conducted on an experimental Mobile Crowdsensing Platform, gathering 40 million decisions in ten languages from respondents in 233 countries and territories. Participants were asked to decide whom to spare in circumstances that combined a whole set of variables: rich or poor, old or young, social status, more or fewer people, and so on.

The research team documented the variations in decisions and summarized global moral preferences according to participants' demographics, revealing broad cross-cultural variation in ethics. The differences in results and opinions uncovered deep cultural traits that influence whether a person chooses to save the lives of some instead of others.

The responses gathered across these countries showed considerable similarities in ethical decisions, which led the researchers to divide them into three clusters. The first cluster included respondents from North America, European nations, and countries in South America (e.g., Brazil) and Africa (e.g., South Africa) where Christianity has historically been the predominant religion. The second cluster contained nations such as Pakistan, Japan, and Indonesia, with established Confucian and/or Islamic traditions. The last cluster consisted of Central and South American countries, as well as France and former French colonies. Examining how political, social, and economic determinants interacted within each group revealed some average tendencies: for instance, respondents from countries with robust government institutions were more prone to choose to hit people who were crossing the street illegally, and countries with lower economic inequality showed a relatively modest gap between sparing the poor and sparing the rich. Despite this great variety of results, according to the experiment's report, the documented preferences are a valuable contribution toward developing quantifiable societal expectations for ethical principles, which can be embedded into machine behavior.
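The study grouped countries into three clusters according to how similar their answers were. As a rough illustration of what such grouping involves, here is a minimal Python sketch of agglomerative (bottom-up) clustering on per-country preference vectors. The countries A to F, the three preference dimensions, the scores, and the centroid-distance merging rule are all made-up assumptions for illustration; this is not the researchers' exact procedure or data.

```python
import math
from itertools import combinations


def centroid(vectors):
    """Element-wise mean of equal-length preference vectors."""
    return [sum(values) / len(vectors) for values in zip(*vectors)]


def cluster_countries(profiles, k):
    """Agglomerative clustering: start with one cluster per country and
    repeatedly merge the two clusters whose centroids are closest
    (Euclidean distance) until only k clusters remain."""
    clusters = [[name] for name in profiles]

    def gap(pair):
        a, b = pair
        return math.dist(centroid([profiles[n] for n in a]),
                         centroid([profiles[n] for n in b]))

    while len(clusters) > k:
        a, b = min(combinations(clusters, 2), key=gap)
        clusters.remove(a)
        clusters.remove(b)
        clusters.append(a + b)
    return clusters


# Made-up preference scores for hypothetical countries A-F:
# (spare more lives, spare the young, spare the law-abiding).
profiles = {
    "A": [0.60, 0.50, 0.30],
    "B": [0.62, 0.48, 0.32],
    "C": [0.40, 0.20, 0.45],
    "D": [0.42, 0.22, 0.43],
    "E": [0.55, 0.60, 0.10],
    "F": [0.57, 0.62, 0.12],
}

print(cluster_countries(profiles, k=3))
```

With these invented scores the sketch returns three groups, {A, B}, {C, D}, and {E, F}. Note that every country ends up in exactly one cluster, so any variation inside a country, or inside a cluster, is summarized away.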

This raises further issues that the researchers noticed after analyzing the answers. As engineers struggle to teach AI-based systems to make these moral decisions, they first need to better understand how humans make ethical choices. Despite the contributions that self-driving vehicles, for instance, can offer in terms of easing road traffic and improving fuel efficiency, the practical ethical issues may have unexpected consequences for the environment and public safety. According to the experiment's authors, the scenarios proposed to respondents represent a small fraction of the moral decisions a person makes in their driving routine. This means that the study's baselines rest on speculative circumstances: in a real situation, drivers are likely to respond differently from the answers they gave in the experiment.

Moral Math and Sustainability

Reaching ethical decisions based on mathematical conclusions poses additional hurdles for social equality. As there is no universal moral rule to rely on, automated systems that spare humans from making moral determinations increase the chance that their judgments will reflect society's systemically dominant views. As the Moral Machine experiment illustrates, the databases used to train automated systems contain many different opinions, but the algorithmic structure orders them by group similarity. This means that judgments falling outside the majority are lost, and the automated conclusions display only the predominant correlations of societal values.
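To make this concrete, here is a minimal Python sketch of a hypothetical engine that, for each group of respondents, keeps only the most common answer. The function name `aggregate_by_group`, the group labels, the choices, and the response counts are illustrative assumptions; they are not taken from the Moral Machine data or its analysis.

```python
from collections import Counter, defaultdict


def aggregate_by_group(responses):
    """Hypothetical majority-rule engine: for each group, keep only the most
    common choice and report the share of that group's answers it discards."""
    by_group = defaultdict(list)
    for group, choice in responses:
        by_group[group].append(choice)

    decisions = {}
    for group, choices in by_group.items():
        winner, count = Counter(choices).most_common(1)[0]
        discarded = 1 - count / len(choices)
        decisions[group] = (winner, discarded)
    return decisions


# Illustrative, made-up responses (not Moral Machine data).
responses = (
    [("cluster_a", "spare_pedestrians")] * 60
    + [("cluster_a", "spare_passengers")] * 40
    + [("cluster_b", "spare_passengers")] * 55
    + [("cluster_b", "spare_pedestrians")] * 45
)

for group, (winner, discarded) in aggregate_by_group(responses).items():
    print(f"{group}: {winner} (ignores {discarded:.0%} of respondents)")
```

Here the engine reports a single answer per group even though 40% of one group and 45% of the other chose differently. Those views do not survive into the output at all, which is the loss of minority perspectives described above.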

Machines work, by definition, through criteria and logical rules, but ethical behavior is full of exceptions that exceed logical patterns. In this sense, the discriminatory mathematical formulas associated with AI-based systems may widen the gap between the aspiration to universal equality and its actual achievement. Creating a shared context of values for both humans and robots could help ease the introduction of AI into society's daily life. However, the 'in-between' process of creating a standard rule for morals and ethics is far from being implemented.

The implementation of the Sustainable Development Goals (SDGs) is a continuous dialogue that fosters all nations' responsibility for promoting better living conditions for all humankind and the planet. SDG 1, for instance, aims to eradicate poverty worldwide and is commonly framed as a universal moral imperative whose main goal is to alleviate the suffering of the impoverished and marginalized. Pursuing this goal requires many measures, such as including vulnerable communities and individuals in decision-making processes, thus narrowing the gap between those who make decisions and those who experience their consequences. In the face of such intricacy, how will a moral engine perform ethical calculations without excluding the imperatives of society's minority groups?

To build such an ethical and moral code, humans first need to establish a single set of ethical rules for themselves before anticipating one fit for robots. As the Moral Machine experiment shows, human ethics are heterogeneous and highly dependent on cultural and social attributes, including emotions, languages, and political beliefs.

As artificial intelligence and algorithms embed themselves into modern society, it becomes of utmost importance to ensure that the decisions made by such systems are aligned with current moral values, avoid discrimination against specific groups, and take sociocultural differences in ethical decision-making into account. Perhaps, instead of formalizing a universal moral rule for human society and later applying it to robots, the answer lies in stimulating ethical learning for machines and humans together. The combination of rational and supra-rational capabilities could, for example, help an artificial agent make moral decisions on its own, without resorting to human guidance. This could allow the agent to help with tasks previously considered "human only," including conflict management, life-or-death decision-making, or commanding personnel.

If automated systems become widespread and globally accepted, they could advance the computational social sciences, helping humanity understand long-standing ethical and philosophical questions. For this to happen, algorithms that make moral decisions should be open-source and explainable instead of being exclusive databases reserved for experts. This could help resolve long-standing conflicts: if both parties (e.g., the general population and public administrations) know the algorithm and its data sources, they may be more likely to trust its decisions than decisions made by a human. In this scenario, tools, policies, and contracts could evolve together with society itself, ensuring that AI-based systems always remain in touch with the culture and environment to which they belong.

Beyond the substantial challenge of defining ethical domains for humans and machines, humans are prone to biases, often unknowingly. For example, in 2014, the multinational company Amazon built a computer program that automatically reviewed job applicants in search of top talent. The model was trained to select professionals based on the resumes the company had received over a 10-year period. Reflecting an industry dominated by men, most of those resumes came from male applicants. Resumes that included words such as "women's" were downgraded, giving male applicants a systematic advantage over women.

To avoid cases such as this one, it is paramount to rigorously check these systems for algorithmic preferences or biases. An Algorithmic Bias Detection Tool could, for instance, continuously review datasets to reduce unfair outcomes and recommend specific changes to how mathematical models interpret the data, thus supporting a program of reparation instead of perpetuating a centuries-old system that disadvantages certain societal groups. By examining several public models, the influence that sensitive variables (race, gender, class, and religion) have on the data can be measured, along with an estimate of the correlations among those variables.
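As a hedged illustration of what such a tool might compute, the Python sketch below runs two simple checks on screening results: the selection rate for each value of a sensitive attribute, with the ratio between the lowest and highest rate, and the Pearson correlation between that attribute and the outcome. The 0.8 threshold follows the "four-fifths rule" of thumb from US employment guidance. The records, the field names `gender` and `selected`, and the function names are illustrative assumptions, not a description of any real tool.

```python
import math


def selection_rates(records, attribute):
    """Share of positive outcomes for each value of a sensitive attribute."""
    totals, positives = {}, {}
    for record in records:
        group = record[attribute]
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + record["selected"]
    return {group: positives[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    return min(rates.values()) / max(rates.values())


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences
    (raises ZeroDivisionError if either sequence is constant)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Made-up screening results: 1 = shortlisted, 0 = rejected.
records = (
    [{"gender": "male", "selected": s} for s in (1, 1, 1, 1, 0)]
    + [{"gender": "female", "selected": s} for s in (1, 0, 0, 0, 0)]
)

rates = selection_rates(records, "gender")
ratio = impact_ratio(rates)
# Encode the sensitive attribute numerically (female = 1) to measure correlation.
r = pearson([1 if rec["gender"] == "female" else 0 for rec in records],
            [rec["selected"] for rec in records])

print(rates, round(ratio, 2), round(r, 2))
if ratio < 0.8:  # four-fifths rule of thumb
    print("Warning: possible adverse impact on", min(rates, key=rates.get))
```

With these invented records the ratio is 0.25, far below 0.8, and the correlation is about -0.6, so the check flags the "female" group. A flag like this only signals a disparity worth investigating; deciding what caused it and how to repair it still requires human judgment.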

What to Expect

With the growing trend of humanizing artificial intelligence, whether by enhancing its conversational skills or by embodying it in humanoid robots, both the uncanny valley and the ethics of human-machine interaction emerge as areas of concern that need further evaluation. Science fiction has explored these relationships for more than a hundred years, mostly using robots as metaphors that externalize the problems humans face with one another.

On the other hand, when dealing with machines that reproduce many human traits, it is the tension between similarities and differences that requires a more complex approach to the future of robotics and artificial intelligence. In the coming decades, people may have relationships with more sophisticated robots, which could give rise to new forms of emotion that complement and enhance human relationships. However, it is imperative to anticipate and discourage scenarios in which these relationships could become socially destructive. The key question is not whether humans can prevent this from happening, but rather what sorts of human-robot relationships should be tolerated and encouraged.

To what extent should humans treat artificially intelligent agents merely as tools? Will machines ever gain enough power to someday confront humankind? Faced with this quandary, an ideal ethical measure may only emerge after years of human-machine interaction.

As humans interact with machines, the resulting metrics will offer insights into how robots should be introduced into daily life. For instance, children could learn at school how to behave toward and treat robots. Adults and late adopters, on the other hand, could learn through dedicated training courses and educational material. By analyzing these outcomes, humans are expected to develop affinities and build a more symbiotic relationship between humans and robots.